Skills

To know more about my skill set, please click on the image.

Welcome to my journey towards Data Science and Machine Learning

To find out about my learning and certifications, please click on the card.

I am involved in various co-curricular activities beyond my learning.

Click the quick links on the home screen to access LinkedIn, Kaggle and Medium.

Digital Lab IT Intern AI/ML Sep 10th 2020 - Dec 18th 2020.

Project: Data Engineering and Product Development on Amazon Web Services (AWS).

Reporting Manager: Kiran Jaladi.

Contact details: Contact me for further details.

Developed a real-time data ingestion framework in Python that ingests data simultaneously into Apache Solr, Amazon DynamoDB and S3. The framework allowed a legacy system to be retired.
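
A minimal sketch of the fan-out idea, assuming each destination is wrapped in a writer function that accepts a batch of records. The in-memory `make_memory_sink` writers are hypothetical stand-ins for real Solr, DynamoDB and S3 clients, and `ingest` is an illustrative name, not the framework's actual API.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List

Record = Dict[str, object]
Sink = Callable[[List[Record]], int]  # takes a batch, returns the number of rows written


def make_memory_sink(store: List[Record]) -> Sink:
    """Hypothetical stand-in for a real Solr/DynamoDB/S3 writer: appends to a list."""
    def write(batch: List[Record]) -> int:
        store.extend(batch)
        return len(batch)
    return write


def ingest(batch: List[Record], sinks: Dict[str, Sink]) -> Dict[str, int]:
    """Write the same batch to every sink concurrently; return rows written per sink."""
    with ThreadPoolExecutor(max_workers=len(sinks)) as pool:
        futures = {name: pool.submit(sink, batch) for name, sink in sinks.items()}
        # .result() re-raises any exception raised inside a sink, so a failed write is not silently lost
        return {name: fut.result() for name, fut in futures.items()}


if __name__ == "__main__":
    solr, dynamo, s3 = [], [], []
    sinks = {
        "solr": make_memory_sink(solr),
        "dynamodb": make_memory_sink(dynamo),
        "s3": make_memory_sink(s3),
    }
    print(ingest([{"id": 1, "value": "a"}, {"id": 2, "value": "b"}], sinks))
```

Running the writes in parallel means the slowest destination, rather than the sum of all three, bounds the latency of each batch.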

Developed an object-oriented Python application for quoting home and auto insurance. Implemented the code on Amazon WorkSpaces and used AWS services such as DynamoDB, S3, Lambda and Step Functions state machines.

On my own initiative, I researched implementing a machine learning model to speed up insurance quote generation.

Systems Engineer Jan 1st 2018 - July 5th 2019.

Project: American Family Insurance - Cloud Data Lake on Amazon Web Services (AWS).

Reporting Manager: Omprakash Rangaraju.

Contact number: Contact me for further details.

Developed Python-based data quality tools to validate data migrated from on-premises Hadoop servers to AWS, cutting validation time to one third of the usual time. The tool's record was validating 1 million records in 4 minutes.

Worked closely with the onsite team to resolve data type change and timestamp issues. Created Spark DataFrames to analyze differences in the data, mainly for data profiling.

Worked with Amazon EMR to access large datasets in the cloud and created tables from S3 buckets to support the data analytics team. I also extracted complex JSON from DynamoDB and parsed it into CSV, and as part of the project I created tables and loaded the data into Hive.
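
Flattening nested JSON into CSV columns can be sketched as below. This assumes plain nested JSON (DynamoDB's typed attribute format, such as `{"S": "..."}`, would need to be unmarshalled first) and uses dotted column names such as `address.city`; `flatten` and `json_records_to_csv` are hypothetical helper names.

```python
import csv
import io
from typing import Any, Dict, List


def flatten(obj: Any, prefix: str = "") -> Dict[str, Any]:
    """Flatten nested dicts/lists into one dict with dotted keys, e.g. {"a": {"b": 1}} -> {"a.b": 1}."""
    flat: Dict[str, Any] = {}
    if isinstance(obj, dict):
        for key, value in obj.items():
            flat.update(flatten(value, f"{prefix}{key}."))
    elif isinstance(obj, list):
        for i, value in enumerate(obj):
            flat.update(flatten(value, f"{prefix}{i}."))  # list positions become numbered columns
    else:
        flat[prefix[:-1]] = obj  # strip the trailing dot
    return flat


def json_records_to_csv(records: List[Dict[str, Any]]) -> str:
    rows = [flatten(r) for r in records]
    # Union of all columns in first-seen order, so records with missing fields still fit
    columns: List[str] = []
    for row in rows:
        for col in row:
            if col not in columns:
                columns.append(col)
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=columns, restval="")  # missing fields become empty cells
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()


if __name__ == "__main__":
    records = [{"id": 1, "address": {"city": "Madison"}, "tags": ["auto", "home"]}, {"id": 2}]
    print(json_records_to_csv(records))
```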

Developed a tool using Python and Unix shell scripts to review data migrated to Snowflake. The tool reduced investigation time and could compare 1 million records in under 4 minutes.
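
The core of such a comparison can be sketched as a keyed diff: hash each row on both sides, then compare digests by primary key. This is an illustrative sketch, not the original tool. It assumes both extracts fit in memory as tuples with the key at a known position, and `row_digest` and `compare` are hypothetical names.

```python
import hashlib
from typing import Dict, Iterable, List, Sequence

Row = Sequence[object]


def row_digest(row: Row) -> str:
    # Values are compared as strings, so 10 and "10" match across systems with different type mappings.
    # The ASCII unit separator rarely occurs in real data, so ("a,b", "c") and ("a", "b,c") differ.
    # Note: None and "" are treated as equal here.
    joined = "\x1f".join("" if v is None else str(v) for v in row)
    return hashlib.sha256(joined.encode("utf-8")).hexdigest()


def compare(source: Iterable[Row], target: Iterable[Row], key_index: int = 0) -> Dict[str, List[object]]:
    """Report keys missing from the target, extra in the target, and present in both but different."""
    src = {row[key_index]: row_digest(row) for row in source}
    tgt = {row[key_index]: row_digest(row) for row in target}
    return {
        "missing_in_target": sorted(src.keys() - tgt.keys()),
        "extra_in_target": sorted(tgt.keys() - src.keys()),
        "mismatched": sorted(k for k in src.keys() & tgt.keys() if src[k] != tgt[k]),
    }


if __name__ == "__main__":
    source = [(1, "a", 10), (2, "b", 20), (3, "c", 30)]
    target = [(1, "a", "10"), (2, "b", 21), (4, "d", 40)]
    print(compare(source, target))
```

Storing one short digest per key, rather than full rows, keeps memory roughly proportional to the number of keys.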

Created daily project reports using SAS and Microsoft Excel dashboards and presented them to the onsite lead and the client.

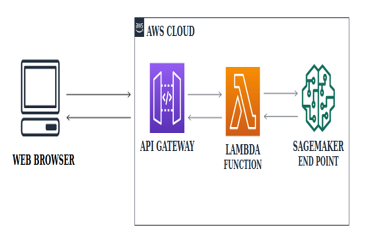

The end goal is a simple web page where a user can enter a movie review, backed by an AWS Lambda function and Amazon API Gateway.
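
On the backend, the Lambda function behind API Gateway receives the review and returns the model's result. The sketch below assumes API Gateway's Lambda proxy integration, where the request body arrives as a string in `event["body"]`, and assumes the model classifies the review, for example as positive or negative. `score_review` is a hypothetical keyword-based placeholder for a call to the deployed model.

```python
import json
from typing import Any, Dict


def score_review(text: str) -> str:
    """Hypothetical stand-in for the deployed model; a real handler would call the model endpoint here."""
    positive = {"great", "good", "excellent", "loved"}
    words = set(text.lower().split())
    return "positive" if words & positive else "negative"


def lambda_handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
    # With Lambda proxy integration, the raw request body is a string (or None) in event["body"]
    review = event.get("body") or ""
    if not review.strip():
        return {"statusCode": 400, "body": json.dumps({"error": "empty review"})}
    result = score_review(review)
    # Proxy integration expects statusCode/headers/body; body must itself be a string
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"result": result}),
    }
```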

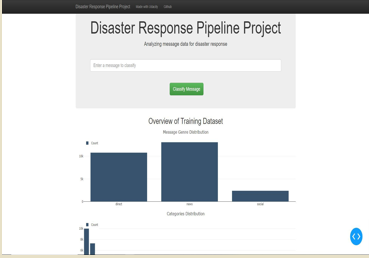

The dataset contains pre-labeled messages from real-life disaster events. This project builds a Natural Language Processing (NLP) model to categorize the messages.

The main goal of this project is to predict whether a customer will respond to offers sent through the mobile app, email and social media.

A plagiarism detector that examines an answer text file and performs binary classification, labeling the file as either plagiarized or not.
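
One common feature for this kind of detector is n-gram containment: the fraction of the answer's word n-grams that also occur in the source text. A sketch, assuming whitespace tokenization and a hypothetical fixed threshold in place of a trained classifier; it is not necessarily the feature set the project used.

```python
from typing import Set, Tuple


def ngrams(text: str, n: int) -> Set[Tuple[str, ...]]:
    """Set of lowercase word n-grams; empty when the text has fewer than n words."""
    words = text.lower().split()
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def containment(answer: str, source: str, n: int = 3) -> float:
    """Fraction of the answer's word n-grams that also appear in the source text."""
    answer_grams = ngrams(answer, n)
    if not answer_grams:
        return 0.0
    return len(answer_grams & ngrams(source, n)) / len(answer_grams)


def is_plagiarized(answer: str, source: str, threshold: float = 0.5, n: int = 3) -> bool:
    # Hypothetical fixed threshold; a trained classifier would learn this boundary from labeled data
    return containment(answer, source, n) >= threshold
```

In a full pipeline, containment scores for several values of n would typically be used together as input features rather than thresholded directly.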

Tableau is a powerful, cloud-based BI tool. Click on the card to view my Tableau Makeover Monday challenge entries.

This project implements several methods for making recommendations, each suited to different situations.
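
Two such methods can be sketched on a simple user-to-items mapping: rank-based recommendations (the most popular items, useful for brand-new users) and user-user collaborative filtering based on shared items. The data layout and function names are assumptions for illustration, not the project's code.

```python
from collections import Counter
from typing import Dict, List, Set

Interactions = Dict[str, Set[str]]  # user id -> set of item ids the user interacted with


def top_items(interactions: Interactions, k: int) -> List[str]:
    """Rank-based: the most-interacted items overall; needs no history for the target user."""
    counts = Counter(item for items in interactions.values() for item in items)
    # Sort by count descending, then by item id so ties are deterministic
    ranked = sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))
    return [item for item, _ in ranked[:k]]


def user_based(interactions: Interactions, user: str, k: int) -> List[str]:
    """User-user collaborative filtering: recommend unseen items from the most similar users first."""
    seen = interactions.get(user, set())
    if not seen:
        return top_items(interactions, k)  # cold start: fall back to popularity
    # Similarity = number of shared items (a simple overlap measure); ties broken by user id
    neighbours = sorted(
        (u for u in interactions if u != user),
        key=lambda u: (-len(interactions[u] & seen), u),
    )
    recs: List[str] = []
    for u in neighbours:
        for item in sorted(interactions[u] - seen):
            if item not in recs:
                recs.append(item)
            if len(recs) == k:
                return recs
    return recs
```

The fallback in `user_based` shows why several methods are useful: collaborative filtering has nothing to work with for a new user, while the rank-based method always returns something.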

This project presents key insights from the Seattle Airbnb dataset using the CRISP-DM approach. The model provides insight into how prices are determined.

This project report explores the Naïve Bayes and logistic regression classifiers and discusses the implementation of both.

This project covers web development concepts. It uses Plotly, HTML, CSS and pandas to build a dashboard that takes a deep dive into census data.

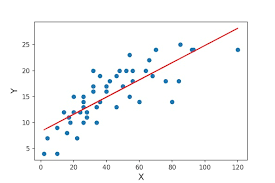

This project implements a linear regression model function without using scikit-learn. The function handles both single-output and multiple-output datasets.
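
A dependency-free sketch of the same idea, assuming ordinary least squares solved through the normal equation (X^T X) w = X^T Y. Treating a 1-D target as one output column and a 2-D target as several columns lets one function cover both cases. The function names are hypothetical, and the original project may well have used NumPy instead of plain lists.

```python
from typing import List, Sequence, Union

Matrix = List[List[float]]


def _solve(a: Matrix, b: Matrix) -> Matrix:
    """Solve a @ x = b by Gauss-Jordan elimination with partial pivoting (b may have several columns)."""
    n = len(a)
    aug = [[float(v) for v in a[i]] + [float(v) for v in b[i]] for i in range(n)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("singular system: features are linearly dependent")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:  # eliminate this column from every other row
                factor = aug[r][col]
                aug[r] = [rv - factor * cv for rv, cv in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def fit_linear_regression(X: Sequence[Sequence[float]], Y: Sequence[Union[float, Sequence[float]]]) -> Matrix:
    """OLS via the normal equation. Returns one row per coefficient (intercept first), one column per output."""
    # A 1-D target is treated as a single output column
    Y2 = [[float(y)] if not isinstance(y, (list, tuple)) else [float(v) for v in y] for y in Y]
    Xb = [[1.0] + [float(v) for v in row] for row in X]  # prepend the intercept column
    p, m = len(Xb[0]), len(Y2[0])
    xtx = [[sum(r[i] * r[j] for r in Xb) for j in range(p)] for i in range(p)]
    xty = [[sum(Xb[k][i] * Y2[k][j] for k in range(len(Xb))) for j in range(m)] for i in range(p)]
    return _solve(xtx, xty)


def predict(weights: Matrix, X: Sequence[Sequence[float]]) -> Matrix:
    """Each prediction row has one value per output: sum_i weights[i][j] * [1, x...][i]."""
    return [
        [sum(w[j] * x for w, x in zip(weights, [1.0] + list(row))) for j in range(len(weights[0]))]
        for row in X
    ]
```

For example, fitting `X = [[0], [1], [2], [3]]` against `Y = [1, 3, 5, 7]` recovers an intercept of 1 and a slope of 2, up to floating-point rounding.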

This package creates dummy columns for a column that holds multiple values. The output is the original DataFrame with all the dummy columns attached and the original multi-valued column dropped.
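
The same transformation, sketched on plain records (a list of dicts) and assuming the multiple values are stored as a delimiter-separated string. `multi_value_dummies` is a hypothetical name. In pandas, `Series.str.get_dummies(sep=...)` produces comparable dummy columns, which could then be joined back onto the DataFrame.

```python
from typing import Any, Dict, List


def multi_value_dummies(rows: List[Dict[str, Any]], column: str, sep: str = ",") -> List[Dict[str, Any]]:
    """Expand a delimiter-separated multi-valued column into 0/1 dummy columns and drop the original."""
    # Collect every distinct value across all rows so each output row gets the same dummy columns
    values = sorted({v.strip() for row in rows for v in str(row.get(column) or "").split(sep) if v.strip()})
    out = []
    for row in rows:
        present = {v.strip() for v in str(row.get(column) or "").split(sep)}
        new_row = {k: val for k, val in row.items() if k != column}  # drop the original column
        for v in values:
            new_row[f"{column}_{v}"] = int(v in present)
        out.append(new_row)
    return out


if __name__ == "__main__":
    movies = [{"id": 1, "genres": "Action, Comedy"}, {"id": 2, "genres": "Drama"}]
    for r in multi_value_dummies(movies, "genres"):
        print(r)
```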

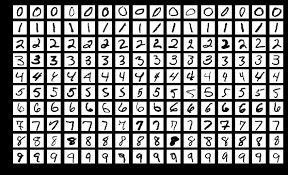

Classification tutorial using TensorFlow Keras and deep learning. This project classifies the MNIST handwritten digits (0-9) into their ten categories.

"I've figured out my learning curve. I can look at something and somehow know exactly how long it will take for me to learn it." - John Mayer

A glimpse of what I have recently learned. Click the Learning & Certifications tab on the home page to find out more.

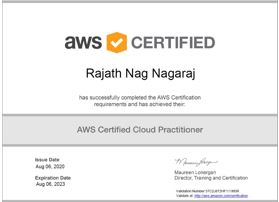

The AWS Certified Cloud Practitioner examination is intended for individuals who have the knowledge and skills necessary to effectively demonstrate an overall understanding of the AWS Cloud, independent of specific technical roles addressed by other AWS Certifications.

A practical approach to deep learning for software developers. The course provided hands-on experience building state-of-the-art image classifiers and other deep learning models.

This exam is for those who have foundational skills and understanding of Tableau Desktop and at least three months of applying this understanding in the product.